Something a little different.

I started to write this a year ago 😉

My day job is in the Kubernetes realm, and I quite like Kubernetes for what it is, but given one of it’s aims is to group the resources of a bunch of machines together, you often need several machines just to ‘try some experiment out’. In my job we just use ‘the cloud’ and I know I am meant to love the cloud because everyone tells me to, but I always feel like I am “Paying Jeff” just to tinker with something and that always seems a bit ‘wrong’ to me. However, there are ways of running Kubernetes locally. You can indeed make up your cluster of machines at home, but often it’d be nicer if you could get away with just running a single system to achieve your test case.

So recently I had a good look at a few local kubernetes alternatives; k3s and k3d and kind . For whatever reasons I liked kind more, so I set about replicating something a bit like I do at work on a single system at home. I have a mix of PC hardware at home running various versions of linux. For a long time I had a bunch of what I would call ‘intel 4th gen’ systems. One of these was a desktop system that I’d continually upgraded over the years until it had 32GB of DDR3 RAM and an i7-4790 CPU. If you know anything about the 4th gen era, then you’d know that those specs are about as good as it gets in consumer hardware for 4th gen. So i was going to use that. It’s old but it has quite a lot of RAM. But certainly, with kubernetes under kind, it’s all on top of docker, I would have a lot of processes running and a lot of load, so maybe it was time to consider an upgrade.

Somewhat related to needing lots of computing power is the rise of all the AI/ LLM stuff over the past year or two. I am undecided where this stuff is all going, but the underlying tech is really interesting. You have quite a lot of open source(ish) large language models now. The big one was ‘llama‘, but deepseek, qwen and others are in the news a fair bit. Initially you could not run these LLMs locally without a very expensive GPU card, but that changed a bit when llama.cpp came out around March 2023. llama.cpp allowed people to use their CPU to run these LLMs somewhat slowly. When I say “run” I mean “inference”.

Anyway, llama.cpp lets you do ‘inference on a CPU’. Of course I had to try this. The first barrier you hit is “What model do I download and from where?”. This is a bit of a minefield for someone new to this. If you poke around the /r/localllama subreddit you can often find suggestions. But you’ll see all this stuff about 7B, 8B, 35B, 70B and so on which at first is quite confusing. I’d go off and read some explanation of LLMs at this point. I often point people at Wolfram’s What is chatgpt doing and why does it work. It’s quite long and starts easy and get’s harder. Usually I tell people to start at the top and read until they can’t understand it anymore 😉 There’s lot’s of other good sources now too. I recently discovered Andrej Karpathy’s Intro to Large Language Models. That’s quite good as well, but probably a bit lighter. I found it had some very important stuff in it though. One thing that resonates with me is how LLM’s are a bit like compression, or in my mind they are like ‘lossy’ compression algorithms like JPEG. There’s a large volume of data that is ‘shoved in’ as part of the training process, and the end result is meant to be something kinda/sorta like the material that was shoved in, but in order to make the LLM a ‘reasonable size’ some amount of ‘quality’ is thrown out.

As an example the ‘llama 3’ models were trained on 15 trillion tokens (tokens are like subsections of ‘words’). But the llama 3 models have been published as a 8B and a 70B. The ‘B’ means ‘Billions of parameters’. The ‘parameters’ are like the ‘weights’ that indicate the correlations between information in the LLM. So very very broadly the 8B model had 8 billion numbers in it , and the 70B had 70 billion numbers in it. Often models are initially published with the numbers stored in an FP16 (16 bit floating point) format, so an 8B using FP16 might take up 16GB of RAM (since 16 bits is 2 bytes). The 70B might then need 140GB. Now the way inference works means that you need to have very fast access to all those billion of parameters to perform a lot of matrix math. The reality is that you have to be able to fit the entire model into RAM otherwise performance is awful. Now, 140GB of RAM is obviously a lot. Even 16GB of RAM for an 8B is a lot as a typical system needs more RAM than that for other operating system processes and the inference program itself.

Thankfully, enthusiasts and researchers have tried reducing the size of these weights/parameters. eg. going from FP16 to an 8 bit integer. Given that these weights are effectively saying ‘the probability of one thing being related to another thing’, reducing them from a 16bit floating point number to an 8 bit integer you would think would mean a large drop off in quality, but it turns out there is very little impact on quality, so the same people have tried lots of smaller numbers; 6 bit, 5 bit, 4 bit , 3 bit, 2 bit and even 1 bit (don’t ask), and other weird combinations where its ‘not quite 4 bits’ and so on. Without going into detail, 4 bit is very common and the quality level is still decent enough for a lot of use cases. Of course going from needing 16 bits per weight to 4 bits is a (roughly) quartering of the size of the LLM. That has two important effects; The entire amount of RAM for the LLM has decreased so an inference program like llama.cpp can perform faster since there is less overall data to traverse across, and because its smaller it fits in to a smaller amount of RAM. This shrinking down to a smaller number of bits per weight is called ‘quantisation’.

So very very broadly a 7B or 8B model at 4 bits might take up 4 or 5GB of RAM. A 35B might be around 21GB. A 70B might be 40 to 42GB. Those numbers become very important. Someone with an 8GB RAM laptop can now run a 7B or 8B model. The 35B will fit in a machine with 32GB of RAM… or if you were using one on a GPU based inferencing system you might have a GPU with 24GB of VRAM, and of course a quantised 35B will fit.

So back to hardware. A 7B or 8B or even a 35B would fit in my i7-4790 with 32GB of RAM. So I tried this (I’ll add here that you generally download all these models from Huggingface ). And I could run these LLMs locally which was very cool. But

- It was slow even with a 7B or 8B

- My 4 core i7-4790 was flat out for minutes. Like really flat out ,400% flat out.

For some people the 2nd point is scary, but I was really impressed. As someone who looks at servers a lot, it is quite rare to see a use case that will simply eat all process resources for minutes. So it was slow and I kind of knew it would be, but you could at least play around with it.

A key outcome here is that I wanted more resources to play with this stuff more. The /r/localllama subreddit is great for people talking about their ‘hacked together testbeds’. Some people spend an astonishing amount of money on this stuff. I’ll quickly highlight some options

- You can ignore trying to ‘run it locally yourself’ and just rent GPUs in the cloud if you’re OK with that. The big 3 are quite expensive, but there are smaller companies like vast and runpod. I’ve only used vast from time to time. You get a docker container running with a GPU passed through. It works great. One other really cool thing about vast is that you can see the CPU you are renting as well, and I’ve ended up renting ones specifically just to benchmark some CPUs.

- You can buy an Nvidia GPU. When I started writing this post, an RTX 4090 24GB was close to NZD$4000 which is quite eye watering. For that you will get really fast performance on LLMs (I’ve rented 4090s to specifically find out). (NB: 5090’s have just come out now too. However they are more like NZ$5500!)

- You can try to get a second hand Nvidia 3090 24GB. In NZ these go 2nd hand around NZD$1500 to $2000 which I also find eye watering. You get great performance as well. Obviously less than a 4090, but in a lot of cases you might not notice too much depending on what you’re doing.

- There are a few other 2nd hand Nvidia options. A very cheap solution was Tesla P40 cards. But like a lot of older Nvidia cards that get popular on /r/localllama, the 2nd hand stocks of these seemingly deplete … and they are no longer cheap. When I started writing this post P40’s were easy to get and relatively cheap, but those days are over. But the P40 is not the only option here. There are P100s with 16GB, and interesting ones like 2080TI cards that have been modified to have 22GB of VRAM. The P40 and P100 also require you to hack together some cooling solution, as they were designed for server cases with flow through cooling and hence have no onboard fan.

- People have been able to get AMD GPUs to work , so too with Intel ARC GPUs, but they are much less popular.

- Lastly is running this stuff on a decent CPU. This is the slowest option but often the cheapest in terms of “You probably want lots of RAM” and while a GPU with 24GB of VRAM (and you reallly need that to play around) is either expensive or requires some hacking (eg. the P40), often getting 32GB of RAM into a motherboard in 2025 is not that hard or as expensive.

So GPUs give you great performance but cost a lot of money (and I’ve left out that you still need to do research to see if your motherboard can take the GPU you want. You need to do research into ‘above 4G’ and ‘bifurcation’ and then these GPUs are so power hungry that you need to look at whether you need an upgraded PSU as well).

So I thought “decent CPU!”

I am very much “I don’t want to spend all this money on a hobby only to find that I am super bored and realise it was a waste of money”. At least going the CPU option I get a faster CPU to do other stuff and there’s also a bunch of Kubernetes related stuff I want to do, and a good CPU and lots of RAM will go a long way with that.

So I actually started renting machines on vast.ai. Not to test out LLMs on GPUs, but to test out LLMs on CPUs because like I said earlier “you get to see what CPU you are renting”. So I would load up llama.cpp running in CPU mode (Just noting that over the past year llama.cpp has had so many features added including ; running on a GPU, splitting across more than one GPU if the model is large, splitting the model between CPU and GPU) and then I loaded up a mistral-7b-instruct-v0.2.Q4_K_M.gguf model (which is old now in 2025). Most benchmarks will show prompt processing speed (ie. the speed of processing what you typed in plus some other stuff you may have typed in earlier) and the ‘generation speed’. I was only interested in the generation speed. And llama.cpp will happily print out a tokens/sec speed when you have a query processed.

I’ll note here that I tested this same mistral 7B on some other machines first

– A raspberry pi 5 8GB . It did 2 tokens/sec

– My i7-4790 / 32GB DDR3 RAM thing. It did 4 tokens/sec

– A Lenovo laptop with a Zen 3 7530U / 16GB DDR4 RAM. 6 tokens/sec

I was able to rent a machine with a Intel 13900K CPU, which at the time of testing was one of the fastest CPUs you could buy. It did 14 tokens/sec on this simple test. I always say ‘anything above 10 token/sec is awesome’. So that was awesome, but a 13900K is very very expensive as well. In the midst of my ‘searching for interesting CPUs to try’ I saw an AMD 7600X. So that is Zen 4, and I know from work that Zen 4 servers are generally impressive. Anyway, that was able to do 12 tokens/sec. And a 7600X is not exactly a budget CPU, but its a lot cheaper than a 13900K.

So that’s what I decided to get. 12 tokens/sec vs the 4 tokens/sec seemed like a big step up. Sure a 7B or 8B model is kind of ‘the bottom’ in terms of LLM quality … but it would be a big step up. I ended up getting a Gigabyte motherboard, the AMD 7600X, a cooler and 2x16GB 6000MT/s DDR5 DIMMs running with XMP (the motherboard could take 4 DIMMs, but I’d read that 4xDDR5 often works worse than 2xDDR5). In hindsight I should have either spent more to get 2x32GB or 2x48GB, but I was trying to contain my budget.

Anyway, I finally had a system that I could do some faster CPU based LLM inference on , although I was limited to models that would fit into 32GB. And it could also run some kubernetes stuff for me too. It is a very good system. I can’t recommend the 7600X enough.

But of course I wanted more.

I was wanting more RAM for my kubernetes experiments … but llama 3 had just come out and that llama 3 70B kept on getting fantastic write ups … but I could not run it locally. You need at least 40 GB to run 4 bit. I rented some containers on vast a few times, and I could definitely see the llama 3 70B 4 bit was quite a step up. I’d also started using Claude 3 Opus at work, so my brain kept on comparing the two … and hey everyone wants the open source LLM world to catch up to the commercial ones … and this was very ‘close’.

So what to do. I had seen all these cheap X79 and X99 motherboards on aliexpress for a while now. They’re the kind of ‘cheap thing’ that sounds cool to me, but there’s a mix of positive and negative reviews for these things. If you are unfamiliar with them. It’s like all the old servers in the world were stripped of their Xeons’s and old DDR3 ECC and DDR4 ECC ram and ended up in China. For some reason, some motherboard manufacturers in China decided to make new motherboards for these chips. So old Xeon, plus old RAM plus new weird motherboard. Quite often these new motherboards would have features that never existed when these old Xeons came out, most noticeably M.2 NVME slots. And they are cheap and possibly the thing that piqued my curiousity was ‘quad channel memory’.

See, if you scour the llama.cpp issues section or /r/localllama you’ll discover that in terms of running LLMs on CPUs, its not the fastest CPU that wins. It generally comes down to ‘memory bandwidth’. ie. How fast can you transfer things between the CPU and the main RAM memory. You’d think surely the CPU speed is a factor, but not as much as you think. Think in terms of ‘the inference program is manipulating so much data in RAM on such a wide scale that all the caches in the world won’t help. Anyway “Quad channel” sounds awesome for getting things faster to/from memory. I knew vaguely that ‘quad channel on an old Xeon’ probably wasn’t as fast as some more modern stuff, but I got a bit lost in the details.

Of course there was an Aliexpress sale at the time. I saw an X99 motherboard with 4x32GB DDR3 ECC DIMMs (so 128GB of RAM) for a really great price. It was some Jingsha brand thing . It was an 8 slot DIMM motherboard so my brain is going ‘Obviously it can take 8x32GB = 256GB’ which would be awesome! I asked the seller if they’d sell it to me with 256GB and the deal was done. It was definitely cheap for ‘anything with 256GB of RAM’, but it was probably the most expensive thing I’ve ever bought on aliexpress. The seller said they’d test it and send it out.

So the board turned up. It had the 8x32GB and Xeon already inserted in the board. I think I had to wait a few days for my cheap Xeon cooler to turn up. Then I tried powering on. It has one of those two digit 7 segment LED POST error code thingees on the motherboard. It flickered through some numbers and eventually I had a picture on the cheap video card I had plugged in. Some mad pressing of the key to the BIOS and I could see the amount of memory detected. It said 128GB. But clearly the board had 256GB plugged into it. For me I then go into troubleshooting mode. I swap DIMMs around. I reseat them. I check the Xeon. So I ended up rebooting this thing a lot. I am trying various things for a few hours and then it just refuses to boot. I get an error like B6 on the LED display and it’s stuck. Its just decided to give up. Damn.

I reach out to the Aliexpress seller. They get me to try things. Because of the time difference this drags on for a week or two, and at the back of my head I am thinking this is not going to end well. The seller was not helpful at all … and I go into ‘cut my losses’ mode. I noticed on my local auction site an auction for an old Supermicro server board, an X9SRL-F that conveniently took the same DDR3 ECC RAMs I had. The Supermicro board actually came already with 8x8GB DDR3 ECC and an E5 2650L V2 10 core Xeon (which was older and slower than the one in the X99 board) and a low profile blower fan heatsink (for a 1U case). After some bidding I won the auction. I thought it was still a bit of a bargain. A bit over NZD$100 for a 10 core machine with 64GB of RAM.

So the X9SRL-F turns up and I test it out. It’s actually a little nicer than the X99 board in that it has built in VGA and IPMI. There’s no NVME though, but I have some PCIE NVME adapters that I’ve used in the past anyway. It boots up, the 64GB checks out and it all works but gosh these blower fans are the loudest thing ever. Obviously my office is not a data centre, and the fan was just obnoxiously loud. I turned it off.

The Supermicro board had a narrow LGA 2011 socket rather than the more normal wider one on the X99 board. That just meant the heatsink holes were different and importantly I could not use the fan I had for the X99 board on this one. I ordered the right LGA 2011 mount off aliexpress , but in the mean time I thought ‘Surely I have some old heatsink bits and pieces that I could hack together to make this go’ and I’d read somewhere that AM4 or AM5 mounting holes are very similar to this narrow LGA 2011 thing. So I took the blower fan off and I had some bolts that looked like they might fit and I was screwing something down into them when I thought ‘this is going a bit too far, I should stop’. That’s when I noticed that these heatsink bolt holes on the LGA 2011 metal mounting plate are only designed for the bolts to go down a few millimetres and stop. The key issue is that the actual Supermicro PCB is directly underneath. ie. they did not design the board so that the heatsink bolts would go all the way through.

I thought “I hope I haven’t damaged the PCB”

Anyway, I park it for a while, and then the proper narrow LGA 2011 bracket turns up and I attach the fan to the X9SRL-F and boot it up and the onscreen POST number stops at B7 and does not get any further. Doh. I eventually work out that if I remove the two DIMMs closest to the Xeon on the power connector side that it does boot up and detects 192GB of RAM (ie. 256-32-32). Doh. Doh. Lots of fiddling and rebooting later and I still cannot get it to work with the full 256GB. I park it for a while.

Eventually I remove the 4 torx bolts that hold the LGA 2011 bracket down, and finally I see the results of my eager heatsink bolt attempts.

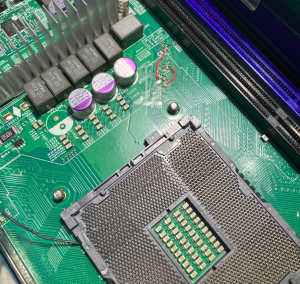

Yep, the burr mark (below where it says CPU1 and to the left a bit) there is the end of the bolt damaging the traces on the PCB. The traces must have gone to the 4th memory channel which is the two DIMMs closest on that side.

Some mad searching the internet for ideas of what to do. Sure 192GB of RAM is still a lot, but I was really keen to have quad channel RAM, so I didn’t want to give up on the 4th channel.

At one point I came across a video with some comments similar to my bolt damage with a comment that ‘people handy with a soldering iron might be able to repair it’. It’s hard to see from the photo but these traces are super tiny. But I decided to have a go:

I don’t have a proper magnifying setup so it was a very hit and miss affair trying to scrape off the green stuff, get some flux in there, and get enough solder to ‘take’ so I could solder these kynar wires down. Initially I had one of the two broken DIMM slots working … and then finally after a few more goes … I got the 2nd one going. All 256GB of RAM detected. I was amazed as my soldering job was quite awful.

So that got me a 10 core V2 Xeon with a low clock speed (E5 2650L), and 256GB of RAM. I set it up with a disk running linux and finally I was able to try the llama 3 70B 4 bit that I wanted to try. I could get 0.7 to 0.8 tokens/second. Oh well ;-). At least it works … but its certainly slow. I was curious to see what the memory bandwidth was actually like. The only decent linux program I found for this was stressapptest by google. It’s designed to stress memory out, but also prints out a memory bandwidth figure. With my quad channel setup I got about 29GB/sec which seemed low to me. If I removed half the RAM (ie. one DIMM per channel) and hence reduced some of the ‘load’, then the speed went to 35GB/s. That still didn’t make a great deal of difference in inference speed. I went back to the 7600X system and ran the same stressapptest. It got 61GB/sec. Wow. that DDR5 with XMP really powers along … and that’s just with two DIMMs. So what I’ve realised now is that the 7600X system with say 2x32GB or 2x48GB would have been the better choice to run these bigger models.

I did actually order a faster CPU for the Supermicro board ; a E5-2697 V2 which is 12 core and has a higher base clock. The most interesting thing is that the LLM performance is the same as the slower E5 2650L. Like I said , memory bandwidth is the bottleneck. The fast CPU was only NZD$32 or so, so it’s not really money wasted and will help out with the kubernetes stuff I want to do.

So, running a kind (kubernetes) cluster on this machine is rather good. Given the type of test loads I run, I often hit the limits of my 12 core CPU. Maybe 2 CPUs might have solved that, but it is a good test bed for that sort of thing.

But for LLM stuff the setup was quite poor, and I started to think about possible options. An interesting one is the modified Nvidia 2080TI cards. The story is basically ; some company in China buys up old 2080ti 11GB cards (they only came with 11GB), desolders the 11GB of VRAM, and solders back in 22GB of VRAM. So you end up with a cost effective way to get over 20GB of VRAM. The prices of these cards vary a lot. You can find them on ebay and aliexpress, but I noticed if I went directly to taobao they are much cheaper. On taobao these modded cards had ‘list prices’ of around NZ$620 which is still a decent chunk of money but way way cheaper than other options like a 3090 or 4090. Anyway, after much umming and arring I decided that I really wanted two of these cards so that I would have 40GB of VRAM , which would mean I could run a 70B in 4 or 5bit, but given my nervousness about ordering one of these, I opted to buy one first. If it turned up and worked good, I would order a second one.

So I ordered one. Now taobao is all in Chinese and even though people outside of China can order stuff on it, it is generally focussed on domestic sales within China. So you have to run your browser in translate mode. I fumbled my way through the ordering process, but it ended up way more expensive than NZ$620. NZ GST was added (which is 15%). Shipping was added which I think was NZ$32 or so, then there was some weird payment fee of $20, then the exchange rate for a foreign credit card is not good .. and my NZ$620 became NZ$750 when I actually clicked the BUY button. Then I waited. I didn’t realise it, but I had somehow hit the button to send via ship, not plane. But an odd thing happened some days later. I started getting weird phone calls from unknown numbers in Sydney. I never answered them, but then the weird phone calls started coming from Hong Kong. I initially ignored these too, but finally answered one. It was the shipping company. They said I needed to pay an NZ$35 customs fee. So, yes, a completely unknown number calls and says ‘give us some money’ ;-). But the Hong Kong person told me to go to Taobao messages. This I had never looked at before, and finally found it. The message wanted me to do a direct bank to bank transfer in NZ for the $35, and to screenshot my payment and upload the screen shot into the chat. So the next challenge was uploading a screenshot. This didn’t seem to work at all. I think I tried lots of different computers and none seemed to work. I eventually got it to work on a Mac (but later I think it may have been related to the DNS from my pihole). The customs agent via taobao messages said the package was ‘released’ and then I waited .. for possibly a month, and then weirdly I started getting txts in Chinese in NZ saying that my parcel would be delivered … on an upcoming Sunday which was a little odd … but sure enough it was delivered on the Sunday before Christmas.

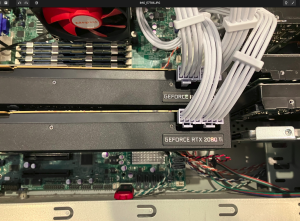

So I finally had a 2080ti 22GB card. Mine was a leadtek branded one with a blower fan. I was umming and arring about whether to put the card in the 7600X machine or the supermicro system. I had actually bought a new modern case ahead of time thinking all GPUs are really long, and the old case I had would not be able to fit the 2080ti. But it turns out that the 2080ti is much shorter than you think, and when I bought the newer style case and put the 7600X system in it, I ended up putting the supermicro system in this older tower case (with 5 1/4 and 3 1/2″ front slots). The 2080ti fitted in the older case . I also had sourced a larger capacity PSU locally. Given that I was aiming to have two 2080ti’s, I ended up buying a 1000W Lian Li PSU.

So did it work? Yes it did. Yes it went faster. Yes I didn’t write down any benchmarks because … I then ordered a 2nd card.

I spoke to the same taobao seller this time, and organised to get an NVLink as well. I got the impression that an NVLink would improve performance, and it is one of the luxuries of older NVidia cards, as the nvlink connector was removed in the 4090 and beyond. So this cost a bit more this time (around NZ$800 all up). And I again, accidentally hit the button to send by ship. After the weird ordering process of the first card I was all prepared to answer weird anonymous calls from Sydney and Hong Kong ;-). Again, I had to transfer NZ$35 to a bank account. I did that but I had a huge issue with uploading the screenshot. Previously I had only used taobao on a browser on a desktop machine, and accessed ‘taobao messages’ from there. But this time when the shipping people called me from Hong Kong, they too said that they had left me a message on taobao messages, but it wasn’t there. On a whim, I installed the taobao app on my phone. Finally I could see the message from the shipping agent (ie. logged in on the taobao app on a phone I could see a lot more messages compared to if I logged in in a browser on a PC). Of course, the next problem was that the taobao phone app would not let me upload a screenshot 🙁 . I then just reached out on the leftover taobao messages chat from ordering the 1st 2080TI. I spoke to the shipping agent on that chat thread, and they let me upload the screenshot to them (via a browser connection) and ‘released’ the parcel.

And the 2nd 2080TI 22GB turned up (about a month later) with the NVLink.

Yes the case is over 20yrs old I think. Yes I am using a pci-e 1x slot with an NVME adapter, yes the orange SATA cable is for an extra drive I needed to plug in, yes all the older drives towards the front of the case are disconnected, and yes I am using a clover boot to allow me to boot from the red USB stick then switch to the NVME drive.

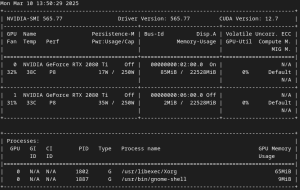

Here’s the obligatory nvidia-smi screenshot

So how does it go with llama.cpp?

Previously I had tested with this older LLM ; mistral-7b-instruct-v0.2.Q4_K_M.gguf . So same kind of test, but running the latest llama.cpp (in March 2025), with the two 2080TI 22GB cards with NVLink fitted, and the full 41 layers offloaded, I get generation speeds of:

mistral-7b-instruct-v0.2.Q4_K_M.gguf : 22.5 token/s

And for some recent LLMs for comparison (and yes there is something weird with the old mistral 7B, as I get faster generation on some modern 32B models)

DeepSeek-R1-Distill-Llama-70B-Q4_K_M.gguf (all 81 layers offloaded, but need to use -nkvo): 9.8 token/s

DeepSeek-R1-Distill-Qwen-32B-Q4_K_M.gguf (all 65 layers offloaded): 23.9 token/s

Qwen_QwQ-32B-Q5_K_M.gguf (all 65 layers offloaded): 21.2 token/s

Llama-3-Instruct-8B-SPPO-Iter3-Q4_K_M.gguf (all 33 layers offloaded): 86.1 token/s

gemma-2-27b-it-SimPO-37K-Q4_K_M.gguf ( all 47 layers offloaded): 28.5 token/s

phi-4-Q5_K_M.gguf (I think this is a 14B. All 41 layers offloaded): 45.6 token/s

Mistral-Small-24B-Instruct-2501-Q8_0.gguf ( all 41 layers offloaded): 22.4 token/s

So that’s all quite good. The 70B is right on that 10 tokens/s border. It’s good enough to work with. Of course the latest news is QwQ 32B being the cool kid, and that runs twice as fast (though spends more time thinking).

One more thing. A 2080TI is quite impressive for gaming as well 😉